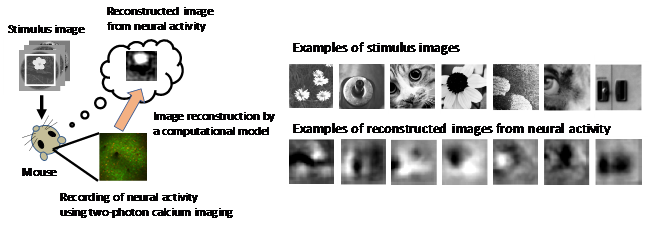

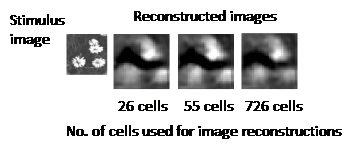

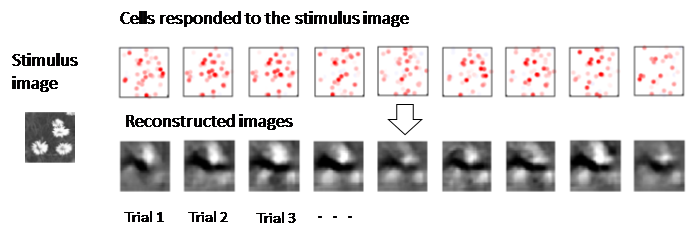

One of the more remarkable talents of the mammalian brain is its ability to convert photons from visual scenes into interpretable patterns of neural signals in the visual cortex. The rules governing this conversion have been studied extensively since the mid-20th century, when direct electrical recordings from the brain became possible. In the early 2000s, the visual cortex was reported to employ what is called “sparse coding,” in which only a relatively small group of highly active neurons is recruited to represent a visual scene. However, the sparse coding model raised some perplexing questions. How can so few neurons ensure a reliable representation of an image? Wouldn't there be too few cells to represent complex scenes? And, of most relevance to the person viewing a scene, how might higher brain areas optimally decode, or “read out,” the sparse activity patterns in the visual cortex to extract meaning from the visual world?

A research team at The University of Tokyo Graduate School of Medicine and the International Research Center for Neurointelligence, led by Kenichi Ohki, addressed these questions by combining cellular-scale imaging with models that reconstruct visual images from neuronal responses, mimicking how the brain might read out sparse activity patterns. The study was recently published in the journal Nature Communications.

The researchers first confirmed that sparse coding does occur in the visual cortex in response to natural images, such as flowers or animals. To do so, they used two-photon calcium imaging in mice whose neurons had been loaded with a chemical calcium indicator or engineered to express a genetically encoded one. Interestingly, the researchers found that single images could be reconstructed from the activity of a surprisingly small number of highly active neurons (Fig. 1), and that adding activity from further neurons actually made the reconstructed images worse (Fig. 2).

This efficient decoding was possible because individual neurons had highly diverse yet overlapping response properties, termed receptive fields, which together provided full, redundant coverage of the range of visual features in the natural images. This redundancy allowed the visual cortex to remain robust to trial-to-trial variability in which neurons responded to a given visual feature, while still representing the image reliably with only a few highly active neurons (Fig. 3). These findings highlight the remarkable efficiency of the brain's information processing. Interestingly, a major advance in AI relies on a similar mechanism: a technique called “dropout,” used in training deep neural networks, randomly removes virtual neurons from the network to avoid overfitting. Still, the biological visual cortex remains far more effective at learning and memory than the best computer algorithms, and ongoing studies are expected to uncover further principles that may one day help scientists truly understand how the brain “sees”.

Correspondent: Charles Yokoyama, Ph.D., IRCN Science Writing Core

Reference: Yoshida, T., & Ohki, K. (2020). Natural images are reliably represented by sparse and variable populations of neurons in visual cortex. Nature Communications, 11(1), 872. DOI: 10.1038/s41467-020-14645-x

Media Contact: The author is available for interviews in English.

Professor Kenichi Ohki, M.D., Ph.D.

Graduate School of Medicine

International Research Center for Neurointelligence (IRCN)

The University of Tokyo

Mayuki Satake

Public Relations

International Research Center for Neurointelligence (IRCN)

The University of Tokyo Institutes for Advanced Study

press@ircn.jp