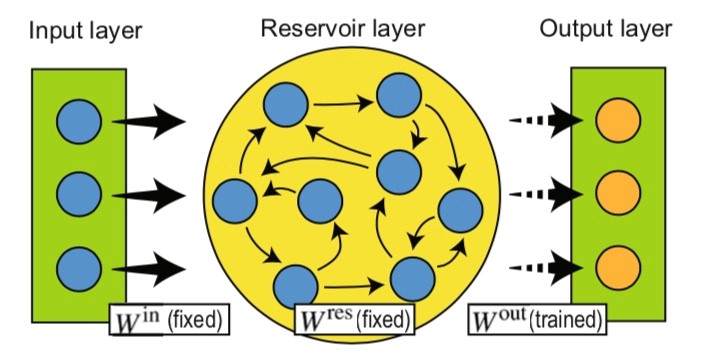

Among the candidate frameworks for next-generation AI, reservoir computing is proving to be an intriguing contender. Conventional machine learning relies on training recurrent neural networks organized in a linear stack of pancake-like layers. Reservoir computing (RC), in contrast, replaces the layers with a nonlinear dynamical system whose processing rules are fixed, and trains only the output. On the surface, RC looks like a black-box algorithm because of its simple input and output rules, but its internal parameters are tunable. In a breakthrough study, a team of researchers from the International Research Center for Neurointelligence and the Institute of Industrial Science at The University of Tokyo, the Japanese IT company NEC, and Kyushu University reports large improvements in the efficiency of RC after introducing key changes to the internal parameters. The innovations are expected to be useful for edge computing, an emerging approach in which AI resources are deployed in low-power end-user environments.

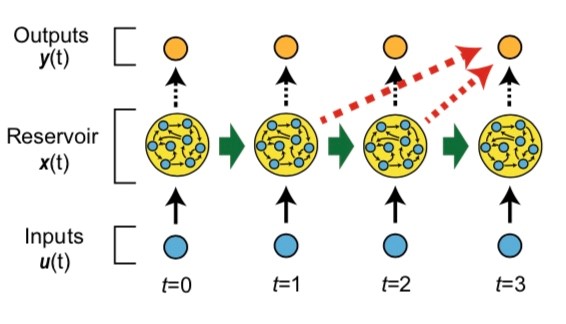

In work published in Scientific Reports, the team achieved greater RC efficiency by redesigning the internal connections in the standard reservoir model. Connections from past and/or drifting states in the time course of the computation were added to the output layer. This change channeled the reservoir’s useful state information to the output and allowed the reservoir to be made smaller without sacrificing computing efficiency. An advantage of the new model is that it is largely analyzable, which is a goal of next-generation AI, and its mechanism was vetted with a measure called Information Processing Capacity (IPC). The IPC analysis showed that the model's parameters can be tuned to improve learning performance on real-world tasks. Finally, the algorithm was tested for benchmark performance on two standard prediction tasks, the generalized Hénon map and NARMA (nonlinear autoregressive moving average). In both tasks, the size of the reservoir could be reduced without impairing performance, validating the novel connection matrix.
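For readers who want a concrete picture of the idea, the following is a minimal sketch in Python with NumPy, not the authors' implementation. It assumes a generic echo state network with tanh units, a ridge-regression readout, and the commonly used NARMA10 form of the benchmark. All function names and hyperparameters (`n_nodes`, `n_past`, `delay`, `spectral_radius`, `alpha`) are illustrative choices rather than values from the paper.

```python
import numpy as np

def narma10(u):
    """Commonly used NARMA10 target series (assumed form; u drawn from [0, 0.5])."""
    y = np.zeros(len(u))
    for t in range(9, len(u) - 1):
        y[t + 1] = (0.3 * y[t] + 0.05 * y[t] * np.sum(y[t - 9:t + 1])
                    + 1.5 * u[t - 9] * u[t] + 0.1)
    return y

def run_reservoir(u, n_nodes, rng, spectral_radius=0.9, input_scale=0.5):
    """Drive a fixed random tanh reservoir with input u; return all states."""
    w_in = rng.uniform(-input_scale, input_scale, size=n_nodes)
    w = rng.normal(size=(n_nodes, n_nodes))
    # Rescale the fixed recurrent weights to the chosen spectral radius.
    w *= spectral_radius / np.max(np.abs(np.linalg.eigvals(w)))
    x = np.zeros(n_nodes)
    states = np.zeros((len(u), n_nodes))
    for t, u_t in enumerate(u):
        x = np.tanh(w @ x + w_in * u_t)
        states[t] = x
    return states

def concat_past_states(states, n_past, delay):
    """Row t becomes [x(t), x(t-delay), ..., x(t-n_past*delay)], zero-padded early on."""
    n_steps = states.shape[0]
    blocks = []
    for k in range(n_past + 1):
        shift = k * delay
        shifted = np.zeros_like(states)
        shifted[shift:] = states[:n_steps - shift]
        blocks.append(shifted)
    return np.hstack(blocks)

def ridge_fit(features, target, alpha=1e-6):
    """Train the readout only: ridge regression with a bias column."""
    X = np.hstack([features, np.ones((len(features), 1))])
    return np.linalg.solve(X.T @ X + alpha * np.eye(X.shape[1]), X.T @ target)

def predict(features, w_out):
    X = np.hstack([features, np.ones((len(features), 1))])
    return X @ w_out

def nmse(y_true, y_pred):
    """Normalized mean squared error."""
    return np.mean((y_true - y_pred) ** 2) / np.var(y_true)

def demo(seed=0, n_steps=3000, washout=200, n_train=2000):
    rng = np.random.default_rng(seed)
    u = rng.uniform(0.0, 0.5, n_steps)
    y = narma10(u)
    states = run_reservoir(u, n_nodes=50, rng=rng)
    train, test = slice(washout, n_train), slice(n_train, n_steps)
    # n_past=0 is the standard readout; n_past>0 also feeds past states to the output.
    for n_past in (0, 2):
        feats = concat_past_states(states, n_past=n_past, delay=1)
        w_out = ridge_fit(feats[train], y[train])
        print(f"n_past={n_past}: test NMSE = {nmse(y[test], predict(feats[test], w_out)):.4f}")

if __name__ == "__main__":
    demo()
```

In this sketch the reservoir itself is left unchanged. Only the readout sees additional inputs, so the number of trained weights grows with `n_past` while the number of reservoir nodes stays fixed. Whether a smaller reservoir then matches a larger one depends on the task and on the choice of `delay`, which is the kind of question the paper addresses with its IPC analysis.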

The kind of network miniaturization demonstrated here as a proof of concept is believed by computer experts to be essential for the emergence of so-called edge computing, where data processing and cloud storage move closer to, or even into, end-user devices for local computing and the Internet of Things (IoT). Small reservoirs instantiated closer to end users may enable improvements in computing speed, performance, and functionality. The low power consumption of RC, unlike that of current “deep” machine learning, may allow integration into embedded systems. Reservoir networks will also increasingly be explored for parallels in the human brain. Some brain areas appear to show similarities to reservoir-type (nearly random) architecture, but more work will be needed to determine whether cognition can emerge from reservoir-type networks. If the current study is replicated by other groups, the connection of past states in the network to its output will be a candidate brain mechanism worthy of exploration.

Correspondent: Charles Yokoyama, Ph.D., IRCN Science Writing Core

Reference: Sakemi, Y., Morino, K., Leleu, T., Aihara, K. (2020) Model-size reduction for reservoir computing by concatenating internal states through time. Scientific Reports. Online publication: December 11, 2020. DOI: 10.1038/s41598-020-78725-0

Figure 1: Reservoir computing network architecture. The reservoir contains randomly connected neurons, fixed connections, and a trained output (see Reference Figure 1).

Figure 2: Concatenation of past states to the reservoir output. The reservoir is modeled as a series of time points with past states connected to the output layer (see Reference Figure 2a).

Media Contact: The author is available for interviews in English.

University Professor Kazuyuki Aihara, Ph.D.

International Research Center for Neurointelligence (IRCN)

The University of Tokyo Institutes for Advanced Study

Mayuki Satake

Public Relations

International Research Center for Neurointelligence (IRCN)

The University of Tokyo Institutes for Advanced Study

pr@ircn.jp